What is an ETL Pipeline?

In this helpful guide we'll discuss what an ETL pipeline is, why it's used, why ELT is a better option, and more.

Overview

With businesses looking to get insights from their data and gain a competitive edge, the pressure is on data engineering teams to process and transform raw data into clean, usable, and reliable resources for downstream use in analytics, data science, and machine learning initiatives.

The ETL pipeline provides a data framework for collecting, cleaning, and analyzing data, making it easier for businesses to gain insights into their operations and make data-backed decisions.

Data pipelines are an important part of modern data architecture. But we should make it clear upfront that the ETL process has largely been replaced by the similar but more modern ELT process. You can read more about the differences between the two here. But much of what you will read in this article applies to both ETL and ELT.

What is ETL?

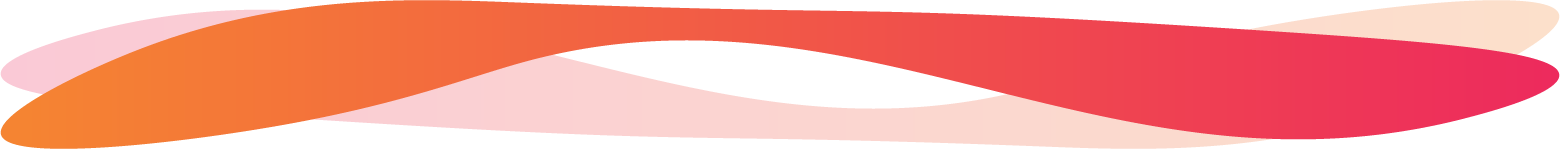

ETL, or Extract, Transform, Load, is a set of processes that involves extracting data from different sources, transforming it as a reliable resource, and loading it into destination systems.

Data Pipeline vs. ETL Pipeline... People often use the terms 'data pipeline' and 'ETL' interchangeably, but there is a distinction. A data pipeline is an umbrella term that covers all types of pipelines, including ETL, streaming ETL, and ELT.

Local or in-house ETL frameworks require in-house data engineers and network experts to keep the operations going. However, it is difficult for small and midsized businesses to bear the cost of managing in-house resources and ensuring security and compliance in local deployment.

Cloud ETL is based on distributed computing resources to ensure flexibility and efficiency. The most significant aspect of cloud ETL is it possesses no upfront costs, making it favorable for businesses of all sizes. Cloud ETL also provides enterprise-grade security to ensure regulatory compliance.

ETL Pipeline Use Cases

If implemented efficiently, ETL data pipelines can help break down data silos and offer a single point of coordination for businesses to rely on. Below are critical ETL pipeline use cases that give you more control over your data and resources.

- Cloud Migration and Cleaning – ETL pipelines enable data migration from legacy systems to the cloud with optimized utilization of resources. They are used to centralize relevant data sources to ensure a complete, normalized, and cleaned version of the updated records.

- Integrating Data Into the Centralized Repository – ETL pipelines are used to fetch data from disparate sources to integrate data from different sources in data lakes or warehouses for data analytics and visualization.

- Business Intelligence – ETL pipelines transform data in a structured format and prepare and clean it to perform analytics and derive valuable insights using different BI tools.

- Enriching CRMs – ETL pipelines can fetch data from different customer-facing systems and converge it into a centralized repository to evaluate marketing KPIs concerning campaign results, customer behavior, engagements, ROI, etc.

- Internet of Things – They also fetch real-time data from IoT devices through stream processing and push it to the target database for businesses to gather meaningful insights and make informed decisions.

Phases in the ETL Process

The ETL process consists of three main steps: Extract, Transform, and Load.

Extract

The first step involves exporting data to the staging area from various sources such as business systems, APIs, sensor data, CRMs, ERPs, transactional databases, etc. The data from the sources may be structured, semi-structured, or unstructured. Data connectors are often used to automate the data collection process.

There are two different ways to perform data extraction in the ETL process.

- Partial Extraction – In partial extraction, only a subset of the data will be extracted to the target system, as opposed to the entire dataset. This can be done using a notification method where the source sends an update notification to the system when the data is being modified. Then, only the modified data is extracted. This method increases efficiency, reduces complexity, and ensures higher data quality.

- Full Extraction – In some systems, the data structures and framework make it difficult to find updated records. In this case, you opt for full extraction. The extracted data needs data normalization to avoid duplication of records. This method can be much more time-consuming and resource-intensive than partial extraction.

Transform

In the staging area, data transformation involves converting and processing the extracted raw data into a format that different applications can use. The data is filtered, cleansed, and transformed into a supported format to prepare for further operations.

This step also ensures the data's quality, compliance, normalization, and integrity.

Learn more about data transformation:

Load

The loading step involves writing the transformed data from the staging area to a target database. There are two ways to load data into the target system.

- Full Loading – This pushes all records from the staging area to the target database. By doing so, your data undergoes exponential growth, and eventually, it becomes challenging to manage the overwhelming volume of data.

- Incremental Loading – This involves only loading additional or updated records to keep the data manageable. It is simpler and more manageable than full loading.

Benefits of ETL Processes

ETL processes involve different advantages that allow businesses to use, analyze, and manage data more efficiently and effectively. Some advantages include:

- Resource Optimization – ETL pipelines eliminate manual intervention by streamlining data processing and improving data quality, leading to better resource utilization.

- Improved Compliance – ETL pipeline allows businesses to ensure data privacy and protection compliances by following industry regulations and encryption protocols.

- Simplified Migration to Cloud – ETL helps in migrating data to the cloud by seamlessly transforming and loading data into desired formats and locations.

- Efficient Analysis – ETL solutions allow businesses to transform raw data into structured and organized datasets that are easier to analyze, leading to more efficient and accurate data analysis and business intelligence.

- Clean and Reliable Data – ETL pipelines ensure that the final data loaded into the system remains clean from all redundancies, inconsistencies, and duplications so that businesses can access updated and reliable information to drive their business-critical operations.

Challenges with ETL

The ETL infrastructure poses some significant challenges and limitations. The primary reason behind these limitations is that the 'transform' process carried out during the data transition at a middle layer makes ETL design more complex. Some of these challenges include:

- Building and maintaining reliable data pipelines is slow and difficult

- Complex code and limited reusability of pipelines

- Managing data quality in increasingly complex pipeline architectures is challenging

- Insufficient data is often allowed to flow through a pipeline undetected

- Extensive custom code is required to implement quality checks and validation at every step of the pipeline.

- Increased operational load in managing large and complex pipelines

- Pipeline failures are difficult to identify and solve due to a lack of visibility and tooling

Some of the downsides associated with ETL pipelines are solved by implementing an ELT process, which is considered more modern. Zuar's platform employs ELT for data collection. Learn the difference between ETL vs. ELT:

ETL Best Practices

When building an ETL data pipeline, it's important to follow best practices to ensure long-term success for your data pipeline. Listed below are five best practices for ETL pipelines.

- Data Profiling – Prior to the extract stage of ETL, data profiling is recommended. This entails reviewing your source data to determine its quality, structure, and relationships with other data. This process helps to eliminate errors and ensures that your data is high-quality before being extracted.

- Incremental Loading – As mentioned above, incremental loading is considered a best practice because it takes less time, is less resource-intensive, and can lead to fewer errors in your data.

- Data Validation – This is the process of validating that the data has been loaded into your target system correctly. After completing the ETL process, it's important that all the data is accurate, consistent, and complete.

- Error-Handling – There needs to be processes and mechanisms in place that detect and report errors that occur in the ETL pipeline. Errors in data pipelines can lead to disruptions in day-to-day operations, so it's important to be able to identify and fix them as quickly as possible.

- Documentation – It's also important to document all aspects of the ETL pipeline. Where the data is coming from, how the data is being mapped, and how the data is being transformed are all examples of information that should be well-documented. This ensures that others in the organization can easily understand the data pipeline and that it can be maintained in the long term.

Automation & Optimization with Fully-Managed ELT

With the increasing complexity of applications and networks, businesses need easy-to-use, secure, and scalable cloud solutions. Fully-managed and streamlined ELT solutions are almost universally preferred over ETL solutions due to their better flexibility, scalability, and cost-effectiveness.

To embrace hassle-free and streamlined data architecture, opt for the fully-managed Zuar ELT platform, which automates end-to-end data workflows. By automating every stage of your data pipeline, you can focus on what matters; making data-driven decisions that drive your business forward.

Contact Zuar and schedule your free data strategy assessment:

Need help with implementation? Zuar's is here to help: