What is Google Cloud Composer?

Get an overview of Google Cloud Composer, including its pros and cons, an introduction to Apache Airflow and workflow orchestration, and answers to frequently asked questions.

Overview of Cloud Composer

Google Cloud Composer is a scalable, managed workflow orchestration tool built on Apache Airflow. Because it offers end-to-end integration with Google Cloud products, Cloud Composer is a strong contender for teams already on Google’s platform or looking for a hybrid/multi-cloud tool to coordinate their workflows.

Key Features of Cloud Composer

- Multi-cloud: While the biggest selling point for Cloud Composer is its tight integration with Google Cloud Platform (GCP), it still supports hybrid setups that orchestrate workflows across cloud providers or on-premises servers.

- Open source: Since Cloud Composer is built on Apache Airflow, the underlying functionality is open source, which reduces vendor lock-in and keeps workflows portable.

- Integrated: Cloud Composer comes with built-in integrations for BigQuery, Dataflow, Dataproc, Datastore, Cloud Storage, Pub/Sub, AI Platform, and more.

- Pure Python: Airflow (and Cloud Composer by extension) lets you define DAGs (described below) as Python code.

- Fully managed: Cloud Composer's managed nature allows you to focus on authoring, scheduling, and monitoring your workflows as opposed to provisioning resources.

What is Apache Airflow?

To understand the value-add of Cloud Composer, it’s necessary to know a bit about Apache Airflow. Airflow is an open source tool for programmatically authoring and scheduling workflows.

Over the past decade, demand for high-quality, robust datasets has soared. As businesses recognize the power of properly applied analytics and data science, robust and available data pipelines become mission-critical, and the need for scalable, reliable pipeline tooling is greater than ever.

Apache Airflow is a free, community-driven, and powerful solution that lets teams express workflows as code. It acts as an orchestrator: a tool for authoring, scheduling, and monitoring workflows. When different technologies and tools must work together, every team needs some “engine” that sits in the middle to prepare, move, wrangle, and monitor data as it proceeds from step to step. An orchestrator fills that need.

As companies scale, the need for proper orchestration grows sharply: data reliability becomes essential, as do data lineage, accountability, and operational metadata. Airflow’s concept of DAGs (directed acyclic graphs) makes it easy to see exactly when and where data is processed. Best of all, these graphs are represented in Python, so they can be dynamically generated, versioned, and processed as code. It’s also easy to migrate logic should your team choose to use a managed or hosted version of the tooling, or switch to another orchestrator altogether. Because a team necessarily relies heavily on its workflow orchestrator, and that reliance creates significant lock-in, Airflow’s Python implementation offers reassurance of portability and low switching costs.
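Because an Airflow DAG file is ordinary Python, a loop over configuration can generate tasks. The sketch below shows that idea in plain standard-library Python rather than Airflow's API: the hypothetical `build_pipeline` function turns a list of table names (also illustrative assumptions) into a dependency map with one extract task and one load task per table, plus a final report task.

```python
def build_pipeline(tables: list[str]) -> dict[str, set[str]]:
    """Return a dependency map: task name -> set of upstream task names.

    Hypothetical plain-Python stand-in for a DAG, not Airflow's DAG API.
    One extract task and one load task are generated per table, and every
    load must finish before a single final 'report' task runs.
    """
    deps: dict[str, set[str]] = {}
    for table in tables:
        deps[f"extract_{table}"] = set()                # no upstream dependencies
        deps[f"load_{table}"] = {f"extract_{table}"}    # load waits for its extract
    deps["report"] = {f"load_{table}" for table in tables}
    return deps


pipeline = build_pipeline(["orders", "customers"])
for task, upstream in sorted(pipeline.items()):
    print(task, "<-", sorted(upstream))
```

Adding a table to the list adds its tasks and wires them into `report` without touching the rest of the pipeline; in a real Airflow DAG file, the same loop would instantiate operators instead of dictionary entries.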

Data teams may also reduce third-party dependencies by migrating transformation logic to Airflow. And there’s little short-term risk of Airflow becoming obsolete: a vibrant community and heavy industry adoption mean that help for most problems can be found online. Together, these features have made Airflow a top choice among data practitioners.

A full-featured ELT platform might be a better fit for your data pipeline needs.

Workflow Orchestration

As previously mentioned, Airflow’s primary functionality makes heavy use of directed acyclic graphs (DAGs) for workflow orchestration. A directed graph is a graph whose edges have a direction, each pointing from one vertex to another. A directed acyclic graph is a directed graph without any cycles (i.e., no sequence of edges leads from a vertex back to itself).
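The acyclic property can be checked mechanically. As a minimal sketch, assuming Python 3.9+ for the standard-library `graphlib` module (this is not how Airflow itself validates DAGs), the example below stores a graph as a mapping from each vertex to its predecessors and reports whether it contains a cycle. The `is_acyclic` helper and the vertex names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def is_acyclic(graph: dict[str, set[str]]) -> bool:
    """Return True if the graph (vertex -> set of predecessor vertices) has no cycle."""
    try:
        # prepare() raises CycleError if any path leads from a vertex back to itself.
        TopologicalSorter(graph).prepare()
        return True
    except CycleError:
        return False


# extract -> transform -> load: a valid DAG.
dag = {"transform": {"extract"}, "load": {"transform"}}
# Adding load -> extract closes the loop extract -> transform -> load -> extract.
cyclic = {"transform": {"extract"}, "load": {"transform"}, "extract": {"load"}}

print(is_acyclic(dag))     # True
print(is_acyclic(cyclic))  # False
```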

Airflow uses DAGs to represent data processing. Each vertex of a DAG is a processing step, and each edge is a dependency between two steps. This structure makes Airflow a great fit for data engineering, since it makes data engineering tenets such as modularity, idempotency, reproducibility, and direct association simple to enforce.
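To make the mapping from vertices and edges to processing steps concrete, here is a plain-Python sketch (not Airflow code) in which each vertex is a function and each edge is a dependency. The hypothetical `extract`, `transform`, and `load` steps write their output into a dictionary keyed by step name, overwriting rather than appending, so re-running the whole graph leaves the same result: a small illustration of idempotency. It assumes Python 3.9+ for `graphlib`.

```python
from graphlib import TopologicalSorter


def extract(store: dict) -> None:
    store["extract"] = [3, 1, 2]                     # pretend source rows


def transform(store: dict) -> None:
    store["transform"] = sorted(store["extract"])    # uses extract's output


def load(store: dict) -> None:
    store["load"] = sum(store["transform"])          # uses transform's output


# Vertices are steps; `upstream` maps each step to the steps it depends on.
steps = {"extract": extract, "transform": transform, "load": load}
upstream = {"transform": {"extract"}, "load": {"transform"}}


def run(store: dict) -> dict:
    # static_order() yields every step after all of its upstream steps.
    for name in TopologicalSorter(upstream).static_order():
        steps[name](store)       # each step overwrites its own key, so reruns are safe
    return store


first = run({})
second = run(dict(first))        # rerun on the already-populated store
print(first == second)           # True
```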

Airflow’s features align with four principles:

- Scalable: Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers.

- Dynamic: Airflow pipelines are defined in Python, so you can write code that generates pipelines dynamically.

- Extensible: Easily define your own operators and extend libraries to fit the level of abstraction that suits your environment.

- Elegant: Airflow pipelines are lean and explicit. Parametrization is built into its core using the powerful Jinja templating engine.
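The "Scalable" principle describes a scheduler handing work to workers through a message queue. The toy model below uses only Python's standard library (`queue`, `threading`, and `graphlib`, assuming Python 3.9+) to show that split: a scheduler loop pushes tasks whose dependencies are satisfied onto a queue, and worker threads pull from it. It is an illustration under those assumptions, not Airflow's executor code; Airflow's Celery executor, for example, uses an external broker such as Redis or RabbitMQ instead of an in-process queue. The `run_with_workers` function and task names are hypothetical.

```python
import queue
import threading
from graphlib import TopologicalSorter


def run_with_workers(upstream: dict[str, set[str]], num_workers: int = 2) -> list[str]:
    """Run tasks in dependency order via a shared work queue and worker threads.

    Toy model only: "running" a task just reports its name back to the scheduler.
    Returns task names in the order they finished.
    """
    sorter = TopologicalSorter(upstream)
    sorter.prepare()                          # also raises CycleError on a cycle
    work: queue.Queue = queue.Queue()         # scheduler -> workers
    results: queue.Queue = queue.Queue()      # workers -> scheduler
    finished: list[str] = []

    def worker() -> None:
        while True:
            task = work.get()
            if task is None:                  # sentinel: shut this worker down
                break
            results.put(task)                 # a real worker would execute the task here

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()

    # Scheduler loop: enqueue every task whose upstream tasks have all finished.
    while sorter.is_active():
        for task in sorter.get_ready():
            work.put(task)
        finished_task = results.get()         # block until a worker reports back
        finished.append(finished_task)
        sorter.done(finished_task)

    for _ in threads:
        work.put(None)                        # one sentinel per worker
    for t in threads:
        t.join()
    return finished


graph = {"load_a": {"extract_a"}, "load_b": {"extract_b"}, "report": {"load_a", "load_b"}}
print(run_with_workers(graph))
```

Because the two extract tasks do not depend on each other, both can sit on the queue at once and run on different workers; `report` is enqueued only after both loads have reported back. Adding workers raises throughput without changing the scheduler loop.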

Google Cloud Operators

Airflow has pre-built, community-maintained operators for creating tasks that run on Google Cloud Platform. Together, these Google Cloud operators and Airflow mean that Cloud Composer can be used as part of an end-to-end GCP solution or a hybrid-cloud approach that relies on GCP. Tight integration with Google Cloud sets Cloud Composer apart as an ideal solution for Google-dependent data teams.
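Operators are also what Airflow's "Extensible" principle refers to: in Airflow, an operator is a Python class whose `execute` method does a task's work, and provider packages such as the Google one supply ready-made subclasses. The sketch below imitates that pattern in plain Python so it runs without Airflow installed; `MiniOperator`, `CopyRowsOperator`, and the `context` dictionary are hypothetical stand-ins, not Airflow's real classes or signatures.

```python
from abc import ABC, abstractmethod


class MiniOperator(ABC):
    """Hypothetical stand-in for an operator base class: one task = one execute() call."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id

    @abstractmethod
    def execute(self, context: dict) -> object:
        """Do the task's work; the return value is the task's result."""


class CopyRowsOperator(MiniOperator):
    """Hypothetical custom operator: copy rows from one in-memory 'table' to another."""

    def __init__(self, task_id: str, source: str, destination: str) -> None:
        super().__init__(task_id)
        self.source = source
        self.destination = destination

    def execute(self, context: dict) -> int:
        tables = context["tables"]                      # stand-in for a real data store
        tables[self.destination] = list(tables[self.source])
        return len(tables[self.destination])            # number of rows copied


context = {"tables": {"raw_orders": [1, 2, 3], "orders": []}}
op = CopyRowsOperator(task_id="copy_orders", source="raw_orders", destination="orders")
print(op.execute(context))    # 3
```

The design choice is that the base class fixes only the contract (a task ID plus an `execute` method), so each new integration is a small subclass rather than a change to the orchestrator.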

Cloud Composer Pros & Cons

Cons

- Not optimal for non-GCP teams: For teams that don’t rely on Google Cloud Platform, many other managed Airflow services are available; Astronomer is a good example. Though Cloud Composer is multi-cloud, little separates Google’s tooling from the alternatives once you set aside the GCP integration.

- Ambiguous Pricing: Cloud Composer’s pricing as part of GCP lacks a clear structure, and Google’s pricing page is incredibly complex. This means that you’ll need to test Cloud Composer as part of your workflow to understand exactly what it will cost. Furthermore, optimizing Cloud Composer costs can be an in-depth operation requiring substantial effort. Other data pipeline products offer the benefit of essentially fixed pricing.

- Built on Airflow: Though Airflow is a very popular orchestration tool, there are a few downsides to using the platform:

  - Requires a working knowledge of Python: While most data and analytics engineers will have some familiarity with Python, it’s important to note that Airflow is written in Python and its DAGs must be authored in Python. If your team works primarily in other languages, Airflow may add complexity to your workflow.

  - No paid support: Google support is already infamous for certain aspects of GCP, and you can expect little technical help with the nuances of Airflow itself. Open source support is often limited to forums and other online resources.

  - Requires specialized infrastructure knowledge: Airflow is a technically complex product. Topics like authentication, non-standard connectors, and parallelization require the specialized knowledge typically held by a data engineer. As a result, Airflow and Cloud Composer are not a good fit for teams seeking a low-code or no-code solution.

  - Long-term support is ambiguous: While open source software has its benefits, long-term support is not guaranteed. Should industry trends shift away from Airflow, community support may dwindle. If you need to migrate to another tool or language, reproducing or refactoring DAGs could be difficult.

  - Not user-friendly: For example, each transfer operator has its own interface, and the mapping from source to destination differs from operator to operator.

  - Lack of integrations: Data transfer operators cover a limited number of databases, data lakes, and warehouses, and almost no business applications, which limits their usefulness.

  - The kitchen sink: We've also heard from users that the lack of versioning is problematic, that Airflow isn't intuitive, that setup is difficult, and that sharing data between tasks is burdensome.

Pros

- Tightly integrated with GCP: The biggest feature that sets Google Cloud Composer apart from other managed Airflow offerings is its tight integration with Google Cloud Platform. For heavy users of Google’s cloud products who need an Airflow implementation, Cloud Composer is a very attractive option.

- Built on Open-Source Airflow: Cloud Composer is built on Apache Airflow, a popular open source framework for workflow orchestration and management. This provides community support, an extensible framework, and no lock-in to proprietary vendor tooling. Updates ship regularly, and most questions can be answered with a quick online search.

- Python: Airflow is written in pure Python, and DAGs are defined in code, bringing version control, object-oriented programming, and reproducibility to workflow management.

- Fully Managed: Cloud Composer is fully managed. Users can focus on building the best orchestration and workflow possible, without worrying about resource provisioning or upkeep.

When to Use Cloud Composer

Cloud Composer has a number of benefits, including its open source underpinnings, pure Python implementation, and heavy usage in the data industry. Nonetheless, open source tooling, and Airflow in particular, has inherent drawbacks.

Your data team may have a solid use case for orchestration and scheduling with Cloud Composer, especially if you're already using Google's cloud offerings. But most organizations will also need a robust, full-featured ETL platform for many of their data pipeline needs: one that can easily pull data from a much greater number of business applications, makes costs easier to forecast, and addresses the other issues covered earlier in this article.

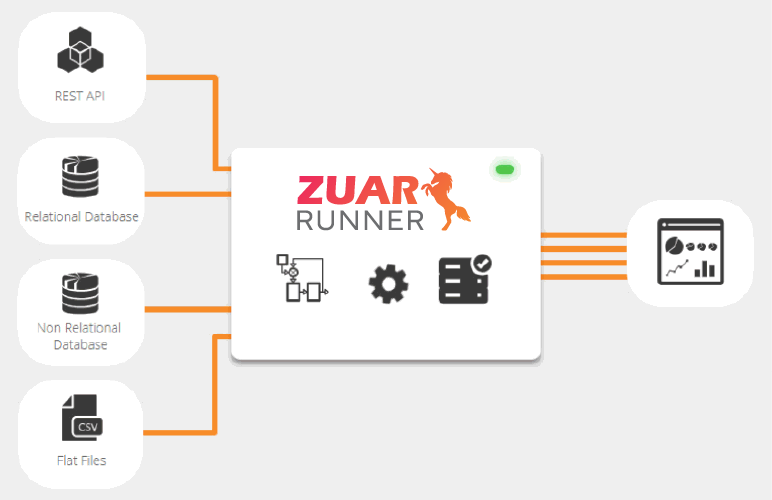

Zuar offers a robust data pipeline solution that's a great fit for most data teams, including those working within GCP. Our ELT solution Zuar Runner will transport, warehouse, transform, model, report, and monitor all your data from hundreds of potential sources, including Google platforms like Google Drive and Google Analytics. Schedule a free consultation with one of our data experts and see how we can maximize the automation within your data stack.

FAQ

What is a Cloud Composer environment?

A Cloud Composer environment is a self-contained Apache Airflow installation deployed into a managed Google Kubernetes Engine cluster.

How do I use Google Cloud Composer?

To start using Cloud Composer, you’ll need access to the Cloud Composer API and Google Cloud Platform (GCP) service account credentials. Both can be set up through GCP settings and configuration. From there, Cloud Composer setup begins with creating an environment, which usually takes about 30 minutes.

What is a Cloud Composer DAG?

A directed graph is a graph whose edges have a direction, each pointing from one vertex to another. A directed acyclic graph (DAG) is a directed graph without any cycles, i.e., no sequence of edges leads from a vertex back to itself. Airflow’s primary functionality makes heavy use of directed acyclic graphs for workflow orchestration, so DAGs are an essential part of Cloud Composer. Cloud Composer DAGs are authored in Python and describe how a data pipeline executes.

How does Cloud Composer work?

Cloud Composer is built on Apache Airflow, and its workflows are written in Python. Cloud Composer deploys an Airflow installation into a managed Google Kubernetes Engine cluster, so you can use Airflow without the overhead of installing or managing it yourself.

Is Cloud Composer the same as Airflow?

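No. Google Cloud Composer is a scalable, managed workflow orchestration tool built on Apache Airflow: it runs Airflow for you in a managed Google Kubernetes Engine cluster and adds integration with Google Cloud products.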

How do I turn off Cloud Composer?

To disable the Cloud Composer API: In the Google Cloud console, go to the Cloud Composer API page. Click Manage. Click Disable API.