Power BI Data Flows: A Complete Guide

In this step-by-step guide, Zuar explains what Power BI Data Flows are, the main types of data flows, and best practices for implementing them.

Overview

Are you struggling with data management in your organization? Power BI Data Flows could be the solution you need. This powerful tool is designed to simplify data integration and transformation, making it easier to create and manage Power BI datasets, reports, and visualizations.

In this blog post, we’ll explore the ins and outs of Power BI Data Flows and how they can revolutionize your organization’s data analytics capabilities to drive revenue.

Key Takeaways

- Power BI Data Flows provide efficient data management through two primary categories, Standard and Analytical.

- Data Flow features include reusability, centralization, collaboration, query folding, and incremental refresh for optimal performance.

- Power BI Data Flows simplify and streamline data preparation and management.

- Power Query Online enables connection to over 80 sources and integration with other Microsoft services such as Azure Data Factory and Power Apps/Excel.

Understanding Power BI Data Flows

Power BI Data Flows are independent data transformation engines that can significantly enhance the development and maintenance of your Power BI solution.

They can be utilized within the organization to create modular ETL/ELT pipelines, preparing datasets for use in Power BI Desktop and other tools. These Power BI pipelines are especially valuable for organizations dealing with diverse data sources and complex data cleansing and transformation requirements.

Data Flows can:

- Establish a single source of truth

- Ensure that all reports and downstream artifacts adhere to the same logic

- Ultimately construct a centralized data warehouse for a Power BI solution.

How Data Flows Work

As cloud-based data transformation services, Power BI Dataflow uses Power Query to process data independently of Power BI datasets, allowing for more efficient data management.

Users can generate datasets in Power BI by selecting a data source, transforming the data, and publishing the Data Flow. These datasets can subsequently be used in a Power BI desktop file.

The two primary categories of Data Flows, Standard and Analytical, offer different capabilities for creating Power BI models.

Benefits of Using Data Flows

Data Flows offer numerous advantages, including:

- Centralized Data: All data preparation logic is stored centrally.

- Reusability: Data Flows can be reused in multiple reports.

- Improved Data Accuracy: Data cleansing and transformations are consistent.

- Collaboration: Multiple users can collaborate on data preparation.

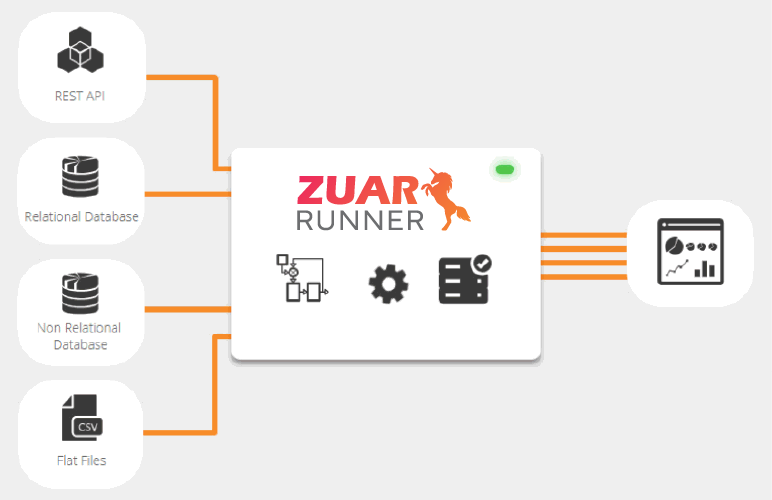

If you're looking for a more robust solution for managing the flow of data, consider implementing an end-to-end data pipeline solution like Zuar Runner.

This solution can automate the collection of data from hundreds of potential sources and consolidate them in one destination, fully prepped for visualization platforms such as Power BI. Learn more about Zuar Runner...

Creating and Managing Power BI Data Flows

Creating and managing Data Flows within Power BI workspaces is a straightforward process. This section will guide you through the process of selecting a data source, defining new entities, and managing data flows to guarantee optimal efficiency and collaboration.

Data Flow Creation Process

To create a new data flow, you will need to do the following:

- Define Data Sources: Connecting to various data sources.

- Data Transformation: Applying transformations using Power Query Online.

- Entity Creation: Structuring data into entities.

Once these steps are complete, save the data flow, making it available for use in generating datasets in Power BI.

Managing Data Flows in Workspaces

Effectively organizing and maintaining data flow within Power BI workspaces is crucial for optimal efficiency and collaboration.

Creating, editing, deleting, setting permissions, and sharing data flow with other users leads to a streamlined data solution that aligns with your organization’s needs.

Implementing clear and descriptive names for data flows also helps stakeholders and new users easily identify and understand the content of the reports.

Power Query Online: The Engine Behind Data Flows

Power Query Online, also known as the power query editor, is the engine that powers data flows, offering access to more than 80 different data sources and a powerful graphical user interface (GUI) for data transformation.

This functionality is essential for creating an effective Power BI model, which is a crucial component of the Power BI service.

The upcoming sections will examine the capabilities of Power Query Online, focusing on how it empowers data flows to perform data transformations and establish connections to various data sources.

Transforming Data With Power Query Online

Power Query Online allows users to perform various data transformations, from simple filtering and sorting to more complex operations like merging or pivoting data.

The graphical interface makes it easy for users to acquire data from sources and apply transformations, while also offering the option to change data types directly within the interface.

With Power Query Online, users can easily transform their data to meet the needs of their business and create insightful Power BI reports for analytics.

Supported Data Sources

Power Query Online is compatible with a wide range of data sources, making it a versatile tool for data transformation within data flows. Some supported data sources include:

- Azure databases like SQL Database and Azure Synapse Analytics

- Flat files like Excel and CSV

- OData feeds

- XML

- JSON

- SharePoint folders

- Online services like Google Analytics, Facebook, and Twitter

By leveraging these various data sources, users can create data flows that accommodate the unique data requirements of their organization.

Standard vs. Analytical Data Flows

Data Flows come in two primary categories: Standard and Analytical. While both types serve to simplify data integration and transformation, they offer different features and use cases.

This section compares and explores the unique capabilities of Standard and Analytical Data Flows.

Standard Data Flows

Standard Data Flows provide basic features for data ingestion and preparation. They are primarily used for storing data in Dataverse, a cloud-based storage solution that serves as a single source of truth for data within the organization.

By using a common data model, Dataverse ensures consistency and seamless integration across various applications and services. This is suitable for general-purpose data preparation and self-service data transformations.

Standard Data Flows are ideal for simple data transformations and processing, making them a suitable choice for organizations with straightforward data requirements.

Analytical Data Flows

Analytical Data Flows, on the other hand, offer advanced capabilities for data analysis and machine learning.

These Data Flows are designed for organizations that require more complex data transformations and aggregations, as they store both data and metadata in Azure Data Lake Storage and allow for the use of computed entities and AI functions. Designed for advanced data modeling, DAX calculations, and data transformations.

Analytical Data Flows are optimal for organizations seeking to harness the full potential of their data for analytics and decision-making purposes.

Linked and Computed Entities in Data Flows

In Data Flows, Linked and Computed Entities serve different purposes and play crucial roles in data management and transformation. Linked Entities enable seamless data sharing between departments, while Computed Entities facilitate advanced data aggregation and transformation.

This section explores the functionality of both Linked and Computed Entities, focusing on their contributions to the efficiency and effectiveness of data flows.

Linked Entities

Linked Entities are entities created in one Power BI Data Flow and used as a source in another, allowing for the reuse and reference of data across different Data Flows.

This streamlines the data transformation process and promotes collaboration across departments, as users can access and leverage the same data without the need for additional transformation logic. This will enhance the power of relationships in your model.

By implementing Linked Entities, organizations can minimize duplication of efforts and enhance overall data management efficiency.

Computed Entities

Computed Entities, on the other hand, are entities that reference linked entities and utilize in-storage calculations for increased performance and scalability. These entities leverage the linked entities’ data to execute calculations and generate new data in accordance with predefined logic.

By incorporating Computed Entities into Data Flows, organizations can perform advanced data aggregation and transformation, leading to more intricate and powerful analytics capabilities.

Power BI Data Flow Limitations

While Power BI data flows offer a powerful way to prepare and transform data for use in Power BI reports and dashboards, they do have some limitations, including:

Data Source Limitations: Data flows can connect to various data sources, but not all data sources are supported. Some sources may not be accessible directly through data flows, requiring you to first load the data into a supported source and then use it in your data flow.

Data Volume and Performance: Data flows are designed for self-service and small to medium-sized datasets. They may not be suitable for extremely large or complex datasets as they could impact performance.

Refresh Rate: Data flows are typically refreshed daily in Power BI Service, with some premium capacity workspaces allowing more frequent refreshes. This might not be suitable for scenarios requiring real-time or near-real-time data.

Dependency on Power BI Service: Data flows are managed and maintained within the Power BI Service, so they cannot be used offline or independently of the service.

Many of the limitations of Power BI data flows can be solved by implementing a more robust ETL/ELT solution, like Zuar Runner. We recommend carefully considering your own data requirements before implementing Power BI data flows.

Data Flow Best Practices

Following best practices when working with data flows is vital to ensure optimal performance and efficiency.

This section shares tips and strategies for handling large data volumes, implementing incremental refresh and query folding, and managing data flows effectively within Power BI workspaces.

Handling Large Data Volumes

Ensuring optimal performance and efficiency in your data flows is essential when working with large data volumes. Power BI’s scalable infrastructure allows users to effectively manage large data volumes for processing and analytics.

By following best practices, such as partitioning datasets and optimizing data retrieval, you can ensure fast and efficient data processing, even with substantial datasets.

Incremental Refresh and Query Folding

Techniques such as incremental refresh and query folding can greatly improve the efficiency of your data flows. Incremental refresh allows for the refreshment of only new or updated data in a data flow, rather than the entire dataset, improving the efficiency of the data refresh process.

Query folding, meanwhile, optimizes the data transformation process by pushing the transformation logic down to the source system, enhancing the performance of the data flow.

By implementing these techniques, you can significantly improve the efficiency and performance of your data flows.

Integrating Data Flows With Other Microsoft Services

Data Flows can be integrated with various Microsoft services, such as Azure Data Factory, Power Apps, and Excel, to further enhance your organization’s data management and analytics capabilities.

This section explores how to maximize the potential of your data solutions by utilizing data flows in conjunction with these services.

Data Flows and Azure Data Factory

While both Power BI Data Flows and Azure Data Factory (ADF) are cloud-based solutions for executing ETL tasks, they differ in terms of scope and application. Data flows are specifically tailored for Power BI workspaces, while ADF wrangling dataflows can be used in a broader range of scenarios.

By integrating data flows with Azure Data Factory, you can generate data-driven workflows for moving, transforming, and processing data from various sources, creating a more comprehensive data solution.

Data Flows in Power Apps and Excel

Data Flows can also be leveraged in Power Apps and Excel to facilitate data management and analysis. By utilizing data flows in Power Apps, users can create custom business applications that enable access, transformation, and processing of data from multiple sources.

In Excel, data flows can be employed to generate spreadsheets that facilitate access, transformation, and processing of data from various sources, providing users with valuable insights for business success.

Optimizing the Flow of Your Data

Power BI Data Flows provide a powerful and flexible solution for data integration, transformation, and analysis. By leveraging the capabilities of data flows, organizations can streamline their data management processes, enhance collaboration, and unlock the full potential of their data.

However, Power BI data flows do come with some limitations, such as a limited selection of data sources, potentially suboptimal refresh rates, and performance difficulties with large datasets.

That's why we recommend considering our automated data pipeline solution Zuar Runner. This solution is designed to be used by organizations of all sizes and has the ability do collect data from hundreds of potential sources.

Additionally, our solution Zuar Portal can provide secure, global access to all the data you need. It is the fast, scalable way to create unique analytics hubs for executives, employees, vendors, customers, and more.

Learn more about how these solutions can modernize your data stack by scheduling a consultation with one of our data experts!