AWS EC2 Monitoring With CloudWatch, SNS, Lambda & Slack

Learn how to subscribe a custom Lambda function to an SNS topic so that it formats a Slack message and makes a POST request to a Slack channel.

Amazon Web Services (AWS) Elastic Compute Cloud (EC2) provides scalable and secure compute resources. The service allows users to launch virtual servers on demand, configure security and networking, and manage storage. EC2 scales up or down to handle changes in requirements or spikes in popularity, reducing the need to forecast traffic.

When managing a large number of EC2 instances, the EC2 console alone offers little visibility into instance behavior. Monitoring beyond the console needs to be configured using services such as CloudWatch and CloudTrail.

Zuar manages hundreds of EC2 instances across three regions running our products, Zuar Runner and Zuar Portal. To enable transparent reporting, we wanted Slack notifications in our #devops channel whenever an instance changes status. This article discusses our implementation and provides instructions for you to do the same!

If you're familiar with Terraform, feel free to simply clone and edit this code.

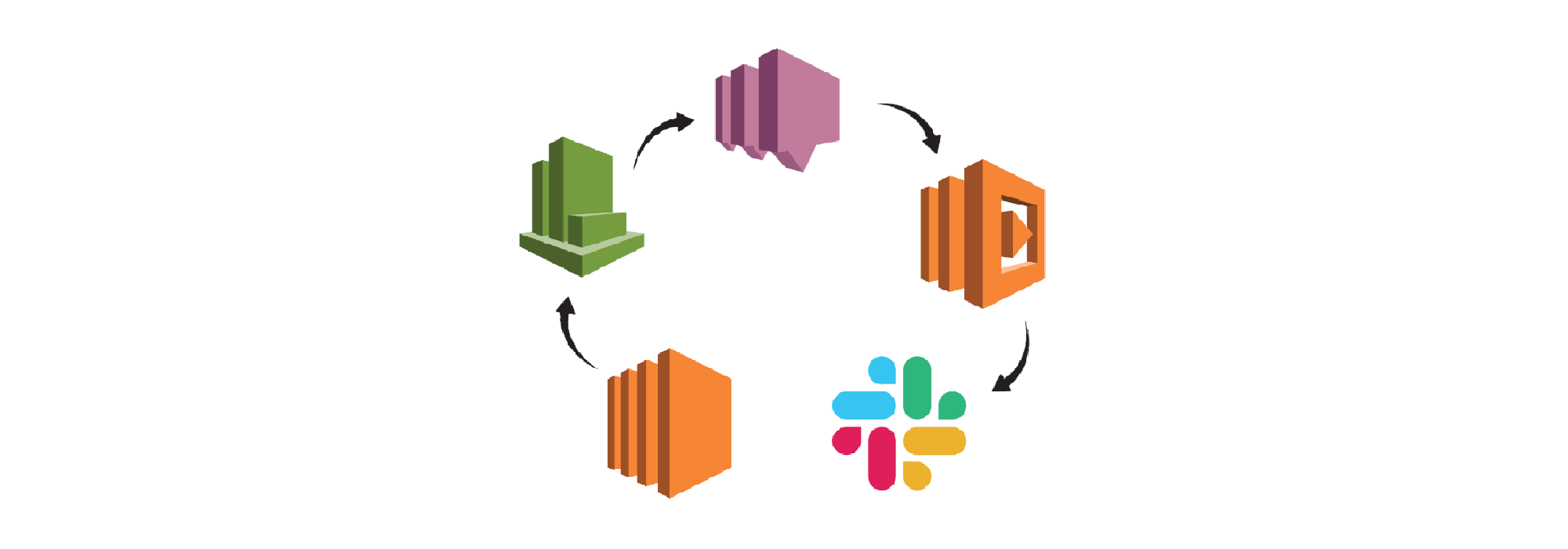

Overview

AWS CloudWatch can be used to create rules that publish to an SNS topic whenever an EC2 instance changes state.

SNS topics can send email alerts on publication. In our case, we'll subscribe a Lambda function to the topic; the function formats the Slack message and then makes a POST request to our Slack channel.

Flow

- A user on our AWS account stops an EC2 instance.

- A CloudWatch Event Rule is triggered and publishes to an SNS topic.

- SNS invokes a Lambda function with the CloudWatch message.

- The Lambda formats the message for Slack and makes a POST request to our webhook.

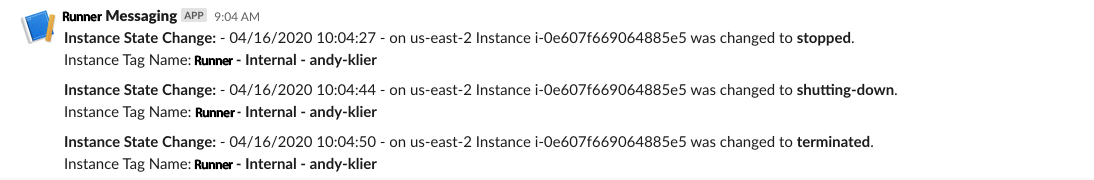
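To make the third step of the flow concrete, here is a minimal sketch of how a Lambda can pull the message out of the event that SNS delivers. The helper name `extract_sns_message` and the trimmed-down `sample_event` are our own illustrations, not part of the deployed function; a real SNS event carries more fields than shown here.

```python
def extract_sns_message(event):
    """Return the message string from the first SNS record, or None if absent."""
    try:
        return event["Records"][0]["Sns"]["Message"]
    except (KeyError, IndexError, TypeError):
        # Not an SNS-shaped event (for example, a manual test with an empty payload).
        return None


# Hypothetical, trimmed-down event; real SNS events include more fields.
sample_event = {
    "Records": [
        {
            "EventSource": "aws:sns",
            "Sns": {
                "Message": "instance_id=i-0123456789abcdef0, state=stopped",
            },
        }
    ]
}

if __name__ == "__main__":
    print(extract_sns_message(sample_event))
```

Returning `None` for a malformed event lets the caller decide how to respond instead of raising inside the handler.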

Results

The messages in our Slack channel should look something like this:

Slack Webhook

First, we need a Slack channel with an incoming webhook.

It should provide a link resembling:

https://hooks.slack.com/services/UNIQUE_ID/UNIQUE_ID/UNIQUE_ID

We’ll use a similar link in our Lambda. We can test that the link is working using curl:

curl -X POST -H 'Content-type: application/json' --data '{"text":"Hello, World!"}' https://hooks.slack.com/services/UNIQUE_ID/UNIQUE_ID/UNIQUE_ID
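The same check can be sketched in Python using only the standard library, which is handy if curl isn't available. The function names `build_slack_request` and `post_to_slack` are hypothetical, and the webhook URL is the same placeholder as above; building the request is kept separate from sending it so the payload can be inspected without touching the network.

```python
import json
import urllib.request


def build_slack_request(webhook_url, text):
    """Build (but do not send) a JSON POST request for a Slack incoming webhook."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_to_slack(webhook_url, text, timeout=10):
    """Send the message and return the HTTP status code."""
    request = build_slack_request(webhook_url, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Placeholder URL: replace the UNIQUE_ID parts with your own webhook values.
    url = "https://hooks.slack.com/services/UNIQUE_ID/UNIQUE_ID/UNIQUE_ID"
    request = build_slack_request(url, "Hello, World!")
    print(request.get_method(), request.data)
```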

Lambda Function

We’ll stay high-level on our Lambda implementation and avoid specifics of deployment, but we’ll need an execution role, a policy, and a policy attachment. Later we’ll add our trigger for SNS.
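As a rough sketch of what the execution role and policy might contain, the two documents below are written as Python dictionaries that can be serialized to JSON. The constant names and the exact list of actions are our assumptions, not the policy we deployed: the trust policy lets the Lambda service assume the role, the logging actions allow the function to write CloudWatch logs, and the EC2 statement would only matter if the function read instance tags through its role.

```python
import json

# Trust policy: allows the Lambda service to assume the execution role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Permissions policy (assumed minimal set; tighten "Resource" for production use).
PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
            ],
            "Resource": "*",
        },
        {
            "Effect": "Allow",
            "Action": ["ec2:DescribeInstances", "ec2:DescribeTags"],
            "Resource": "*",
        },
    ],
}

if __name__ == "__main__":
    print(json.dumps(TRUST_POLICY, indent=2))
    print(json.dumps(PERMISSIONS_POLICY, indent=2))
```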

Our Lambda uses Python 3.6. There are four environment variables we will need to set: ACCESS_ID, ACCESS_KEY, REGION, and SLACK_HOOK.

Below is the Lambda function:

"""Get EC2 state-change info from SNS and post it to Slack."""
import json
import os
from datetime import timedelta

import boto3
import requests
from dateutil import parser

# Credentials and settings come from the Lambda's environment variables.
ACCESSKEY = os.environ['ACCESS_ID']
SECRETKEY = os.environ['ACCESS_KEY']
REGION = os.environ['REGION']
SLACK_HOOK = os.environ['SLACK_HOOK']


def get_instance_name(fid):
    """When given an instance ID as str e.g. 'i-1234567', return the instance 'Name' from the name tag."""
    ec2 = boto3.resource('ec2', aws_access_key_id=ACCESSKEY, aws_secret_access_key=SECRETKEY, region_name=REGION)
    ec2instance = ec2.Instance(fid)
    instancename = ''
    # tags is None for an untagged instance, so fall back to an empty list.
    for tags in ec2instance.tags or []:
        if tags["Key"] == 'Name':
            instancename = tags["Value"]
    return instancename


def message_to_dict(message):
    """Convert the 'key=value, key=value' message to a dict."""
    message = message.replace("\"", "")
    mdict = {}
    for word in message.split(", "):
        # Split on the first '=' only, in case a value contains one.
        key, value = word.split("=", 1)
        mdict[key] = value
    return mdict


def build_response(text, status_code=200):
    """Build the response dict returned by the handler."""
    return {
        'statusCode': status_code,
        'body': json.dumps({'response': text}),
        'headers': {
            'Content-Type': 'application/json',
            'Access-Control-Allow-Origin': '*'
        },
    }


def handler(event, context):
    try:
        message = event["Records"][0]["Sns"]["Message"]
    except (KeyError, IndexError, TypeError) as e:
        # Return instead of calling exit(), which would terminate the runtime process.
        print(e)
        return build_response('invalid event', 400)

    # Skip the transitional "pending" and "stopping" states.
    if "pending" in message or "stopping" in message:
        return build_response('pass')

    mdict = message_to_dict(message)
    d = parser.parse(mdict["time"])
    # Shift the UTC event time back 5 hours for display (fixed offset; adjust for your time zone).
    d = d - timedelta(hours=5)
    human_time = d.strftime('%m/%d/%Y %H:%M:%S')
    instance_name = get_instance_name(mdict["instance_id"])
    message = f'*Instance State Change:* - {human_time} - on {mdict["region"]} Instance {mdict["instance_id"]} was changed to *{mdict["state"]}*.\nInstance Tag Name: *{instance_name}*'
    myobj = {'text': message}
    try:
        x = requests.post(SLACK_HOOK, json=myobj)
    except Exception as e:
        print(e)
        return build_response(str(e), 500)
    return build_response(x.text)

NOTE: When packaging this for deployment, be sure to include requests as a dependency.

Simple Notification Service (SNS)

Next, we’ll need to create an SNS topic and a subscription to our Lambda function.

In the SNS console, click "Create Topic"; all we need here is a name. Once that's done, we’ll go to "Subscriptions" and create one: enter the ARN for the topic we created, select "AWS Lambda" as the protocol, and select the ARN for the Lambda.
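For readers who prefer scripting over the console, the same setup can be sketched with boto3. The function name `wire_topic_to_lambda`, the statement ID, and the ARNs in the usage comment are hypothetical; the clients are passed in as arguments so the sketch can be exercised without real AWS credentials. We assume the function also needs a resource-based permission allowing SNS to invoke it, which the console normally adds for you.

```python
def wire_topic_to_lambda(sns_client, lambda_client, topic_name, function_arn):
    """Create an SNS topic, allow it to invoke the Lambda, and subscribe the Lambda."""
    topic_arn = sns_client.create_topic(Name=topic_name)["TopicArn"]

    # Grant SNS permission to invoke the function, scoped to this topic.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId="AllowInvokeFromSns",  # hypothetical statement ID
        Action="lambda:InvokeFunction",
        Principal="sns.amazonaws.com",
        SourceArn=topic_arn,
    )

    subscription = sns_client.subscribe(
        TopicArn=topic_arn,
        Protocol="lambda",
        Endpoint=function_arn,
    )
    return topic_arn, subscription["SubscriptionArn"]


# Usage sketch (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   wire_topic_to_lambda(
#       boto3.client("sns"),
#       boto3.client("lambda"),
#       "ec2-state-changes",
#       "arn:aws:lambda:us-east-1:123456789012:function:ec2-slack-notifier",
#   )
```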

CloudWatch Rule

In the CloudWatch console, click “Events > Rules” in the menu on the left. Select “Create Rule”, then “Event Pattern”, and set Service to “EC2” and Event Type to “EC2 Instance State-Change Notification”. Select “Any State” and “Any Instance” to publish to SNS on changes to any instance in this region.
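Behind the console form, these choices correspond to an event pattern roughly like the one below. The `matches` helper is a deliberately simplified illustration of the idea (exact values on top-level fields only), not the matching logic AWS actually runs, and the sample event uses placeholder account and instance IDs.

```python
# Event pattern equivalent to "EC2", "Any State", "Any Instance".
EVENT_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
}

# Hypothetical state-change event with placeholder IDs.
SAMPLE_EVENT = {
    "version": "0",
    "detail-type": "EC2 Instance State-change Notification",
    "source": "aws.ec2",
    "account": "123456789012",
    "time": "2021-01-01T12:00:00Z",
    "region": "us-east-1",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "stopped"},
}


def matches(pattern, event):
    """Simplified check: every pattern field must list the event's value."""
    return all(event.get(field) in allowed for field, allowed in pattern.items())


if __name__ == "__main__":
    print(matches(EVENT_PATTERN, SAMPLE_EVENT))
```

Restricting the rule to particular states or instances would add a `detail` section to the pattern, which this simplified matcher does not handle.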

On the right, select “SNS Topic” at the top and choose the relevant SNS topic. Below that, select “Input Transformer”. In the first text area, use:

{"instance-id":"$.detail.instance-id","state":"$.detail.state","time":"$.time","region":"$.region","account":"$.account"}

and in the second:

"instance_id=<instance-id>, time=<time>, region=<region>, state=<state>"

Our Lambda function will expect the format above. It's time to do some testing!
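To see how the two text areas fit together, the sketch below imitates the input transformer locally: it resolves each input path against a sample event, fills in the template, and then parses the result the way our Lambda does. The helpers `resolve_path` and `apply_transformer` are our own simplified stand-ins (dotted paths only), and the sample event uses placeholder values.

```python
import re

INPUT_PATHS = {
    "instance-id": "$.detail.instance-id",
    "state": "$.detail.state",
    "time": "$.time",
    "region": "$.region",
    "account": "$.account",
}
INPUT_TEMPLATE = '"instance_id=<instance-id>, time=<time>, region=<region>, state=<state>"'

# Hypothetical event with placeholder IDs.
SAMPLE_EVENT = {
    "account": "123456789012",
    "time": "2021-01-01T12:00:00Z",
    "region": "us-east-1",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "stopped"},
}


def resolve_path(event, path):
    """Resolve a simple '$.a.b' path (dotted keys only) against the event."""
    value = event
    for key in path[2:].split("."):
        value = value[key]
    return value


def apply_transformer(event, input_paths, template):
    """Fill each <name> placeholder in the template with its resolved value."""
    values = {name: resolve_path(event, path) for name, path in input_paths.items()}
    return re.sub(r"<([^<>]+)>", lambda m: str(values[m.group(1)]), template)


def message_to_dict(message):
    """Parse 'key=value, key=value' text, ignoring surrounding quotes."""
    message = message.replace('"', "")
    return dict(pair.split("=", 1) for pair in message.split(", "))


if __name__ == "__main__":
    message = apply_transformer(SAMPLE_EVENT, INPUT_PATHS, INPUT_TEMPLATE)
    print(message)
    print(message_to_dict(message))
```

Note that the `account` path is extracted but never used by the template, so it does not appear in the parsed dictionary.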

Testing and Troubleshooting

To test everything we created, we can simply go to our EC2 console and start/stop a test instance in the correct region.

If a message isn’t posted to Slack, we can check the CloudWatch logs to confirm the function was called. If it wasn't, something may be amiss with our SNS trigger. It is also worth double-checking permissions, roles, and policies, since those are often missed.
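One more thing to keep in mind while testing: our handler deliberately skips the transitional states, so not every state change produces a Slack message. The sketch below reproduces that filter in isolation; `should_notify` and `build_message` are hypothetical helper names, and the message format follows the input transformer template from earlier.

```python
# The six EC2 instance states.
EC2_STATES = ["pending", "running", "stopping", "stopped", "shutting-down", "terminated"]


def should_notify(message):
    """Mirror the handler's filter: skip messages mentioning 'pending' or 'stopping'."""
    return "pending" not in message and "stopping" not in message


def build_message(state):
    """Build a message in the input transformer's format (placeholder values)."""
    return (
        "instance_id=i-0123456789abcdef0, time=2021-01-01T12:00:00Z, "
        f"region=us-east-1, state={state}"
    )


notified = [state for state in EC2_STATES if should_notify(build_message(state))]

if __name__ == "__main__":
    print(notified)
```

So starting a stopped instance should yield one message (for "running"), not two.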
